As more businesses become data-centric, A/B testing has gained traction because companies want to be sure their applications match what customers actually want. The A/B testing process is not as easy as it appears, though. Myths and poor practices are widespread in the industry, and they are a common reason tests fail. To clear up those myths and help businesses run A/B tests successfully, this article discusses the A/B testing process and its methodology in detail.

What is the A/B Testing Process?

A/B testing is a statistical way of comparing two or more versions of something, such as a web page or an email. It not only determines which version performs better but also helps organizations understand whether the difference between the versions is statistically significant, that is, unlikely to be the result of chance alone.

Harvard Business Review describes it this way: "A/B testing, at its most basic, is a way to compare two versions of something to figure out which performs better. While it’s most often associated with websites and apps, Fung says the method is almost 100 years old."
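To make "statistically significant" concrete, here is a minimal sketch of one common way to compare two conversion rates, a two-proportion z-test. The article does not prescribe a specific test, so the choice of test, the function name, and the visitor counts in the usage note are all illustrative assumptions.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / conv_b: number of conversions in each version (hypothetical inputs).
    n_a / n_b: number of visitors shown each version.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, `two_proportion_z_test(200, 5000, 250, 5000)` compares a 4% and a 5% conversion rate. A p-value below a threshold chosen in advance (0.05 is a common convention) would suggest the difference is unlikely to be due to chance alone.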

Why Do Businesses Need an A/B Testing Process?

A common problem is that most companies think they understand their customers well. In practice, customers often behave differently from what companies predict, whether consciously or subconsciously. Users frequently don't know, or can't articulate, why they make a decision; they just make it. When you run an A/B test, the results can be humbling, because customers may behave in the opposite way to what you expected. So it is better to run tests than to rely on intuition.

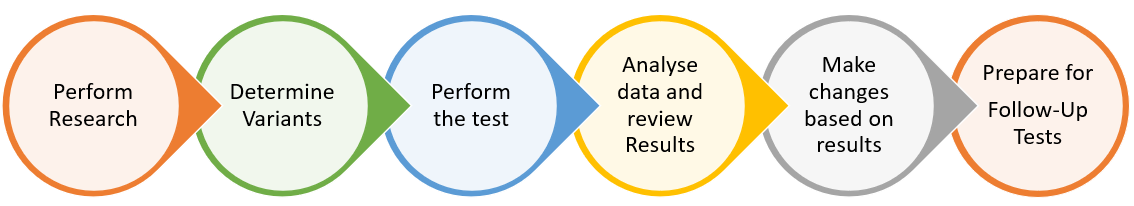

A Successful A/B Testing Process

Understanding How to Run an A/B Testing Process with an Example

For example, in marketing or web design, you might compare two different landing pages or two different newsletters.

Let's say you take the layout of the page and move the content body to the right versus the left. Maybe you change the call-to-action from black to blue, or your newsletter subject line has the word "offer" in Version A and the word "free" in Version B.

For A/B testing to work, you must state your success criteria before the test begins. What is your hypothesis? What do you think will happen when you change to Version B?

Maybe you're hoping to increase the conversion rate, newsletter signups, or open rate. Define your criteria for success ahead of time. You also need to split your traffic between the two versions (it doesn't have to be 50-50) and work out the minimum number of people needed for the test to achieve statistically significant results. The same approach works with more than two versions.
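As a rough illustration of working out the minimum number of people, the sketch below uses the standard normal-approximation formula for comparing two proportions. It assumes equal-sized groups, a two-sided test, and conventional defaults (5% significance level, 80% power); the function name and defaults are assumptions, not something the article specifies.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH version to detect the given lift.

    baseline_rate: current conversion rate of Version A (e.g. 0.04).
    expected_rate: conversion rate you hope Version B achieves (e.g. 0.05).
    Assumes an equal split; an uneven split needs a different formula.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value, two-sided
    z_beta = NormalDist().inv_cdf(power)           # quantile for desired power
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(
            baseline_rate * (1 - baseline_rate)
            + expected_rate * (1 - expected_rate)
        )
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)
```

Calling `sample_size_per_variant(0.04, 0.05)` returns the approximate number of visitors per version needed to detect a lift from 4% to 5%. The smaller the difference you want to detect, the more visitors the test needs.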

Conducting A/B Testing of the Website

A strong website depends on many factors, and interface design is one of the most important. If a website has a professional and pleasant design, people are likely to spend more time on it.

Some developers use A/B test analysis to compare two versions of a website that differ in certain design elements. Typically, a web developer makes both designs available to users and then measures which of the two visitors prefer.

In practice, you show each user one of the two versions at random and choose metrics to measure which version works better. Some teams define success as the number of sign-ups, others as the number of sales that a version generates.
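One common way to implement random assignment is to hash a stable user identifier, so that a returning user always sees the same version. The sketch below is an assumption about how this could be done, not a description of any particular tool; the experiment name and the `share_b` parameter are hypothetical.

```python
import hashlib


def assign_variant(user_id, experiment="landing-page-test", share_b=0.5):
    """Deterministically assign a user to version "A" or "B".

    share_b is the fraction of traffic sent to Version B; it does not
    have to be 0.5. The experiment name is a hypothetical label that keeps
    assignments independent across different tests.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 32 bits of the hash to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < share_b else "A"
```

Because the assignment depends only on the user ID and the experiment name, no lookup table is needed, and changing the experiment name reshuffles users for the next test.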

There are certain things to remember with A/B testing. Collect enough data before you draw a conclusion, follow the guidelines for statistical confidence so that your results can support that conclusion, and always test both versions of your design at the same time.
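One way to check whether the data collected so far supports a conclusion is to look at a confidence interval for the difference in conversion rates. The following is a minimal sketch using the normal approximation; the function name, the 95% default, and the sample counts in the usage note are illustrative assumptions.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Return (low, high) bounds for rate_B - rate_A.

    Uses the normal approximation, which is reasonable only when each
    version has a fairly large number of visitors and conversions.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Unpooled standard error of the difference between the two rates.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se
```

With 20 of 500 visitors converting on Version A and 25 of 500 on Version B, the interval includes zero, so the data cannot yet distinguish the two versions. With the same rates at ten times the traffic (200 of 5,000 versus 250 of 5,000), the interval lies entirely above zero.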

Results may differ from one day to the next, so don't assume that your instincts are correct. Always wait for the full results before you conclude that one version is better than the other. Often you will find that users do respond better to the version you like, but let the data confirm it. Run as many A/B tests as you need. This way you get accurate results and arrive at the best design for your site.

Critical Things to Get Accurate A/B Test Analysis Results

The following procedure is critical to getting accurate results:

- Choose what will be tested

- Set proper goals

- Analyze the data

- Set elements to A/B test

- Create a variant

- Design your test

- Gather/accumulate data

A/B testing is a way to collect information about how design changes to a website perform. Analyzing the results with sound A/B test statistics is the key to delivering a successful user experience.

Performance Metrics for A/B Testing Process and Analysis

Here are a few key performance metrics that help organizations evaluate A/B test results and measure their success. Metrics that companies can track include:

- Rate of total conversions

- Click-through rate on CTAs

- Visitor bounce rate

- User retention rate

- Average session duration

- Time the user spends scrolling

- Revenue metrics, etc.
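Several of the metrics above can be computed per version from simple session records. The sketch below assumes a hypothetical record layout (the dictionary keys are invented for illustration) and treats a "bounce" as a session with at most one page view, which is a common but not universal definition.

```python
def summarize(sessions, variant):
    """Compute basic A/B metrics for one version from session records.

    Each session is a dict with hypothetical keys: "variant",
    "pages_viewed", "clicked_cta", "converted", "duration_seconds",
    and "revenue".
    """
    rows = [s for s in sessions if s["variant"] == variant]
    n = len(rows)
    if n == 0:
        return {}  # no traffic recorded for this version
    return {
        "sessions": n,
        "conversion_rate": sum(s["converted"] for s in rows) / n,
        "cta_click_through_rate": sum(s["clicked_cta"] for s in rows) / n,
        # Assumption: a bounce is a session with at most one page view.
        "bounce_rate": sum(s["pages_viewed"] <= 1 for s in rows) / n,
        "avg_session_duration": sum(s["duration_seconds"] for s in rows) / n,
        "revenue_per_session": sum(s["revenue"] for s in rows) / n,
    }
```

Running `summarize(sessions, "A")` and `summarize(sessions, "B")` on the same data gives two comparable summaries, one per version.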

To design a successful A/B testing process and get the most from their KPIs, organizations must follow best practices for using key statistics and analyzing test outcomes. Contact our V-Soft Consulting experts for more details on the A/B testing process.

About Author

Raj Kumar works as a Sr. Test Engineer with V-Soft Consulting India Pvt. and has 8+ years of software testing experience. He has good knowledge of Agile process methodology, ServiceNow, and AI domains. He has gained experience in various testing areas, including web services, mobile, GUI, functional, integration, system, ad hoc, usability, database, smoke, regression, and retesting.